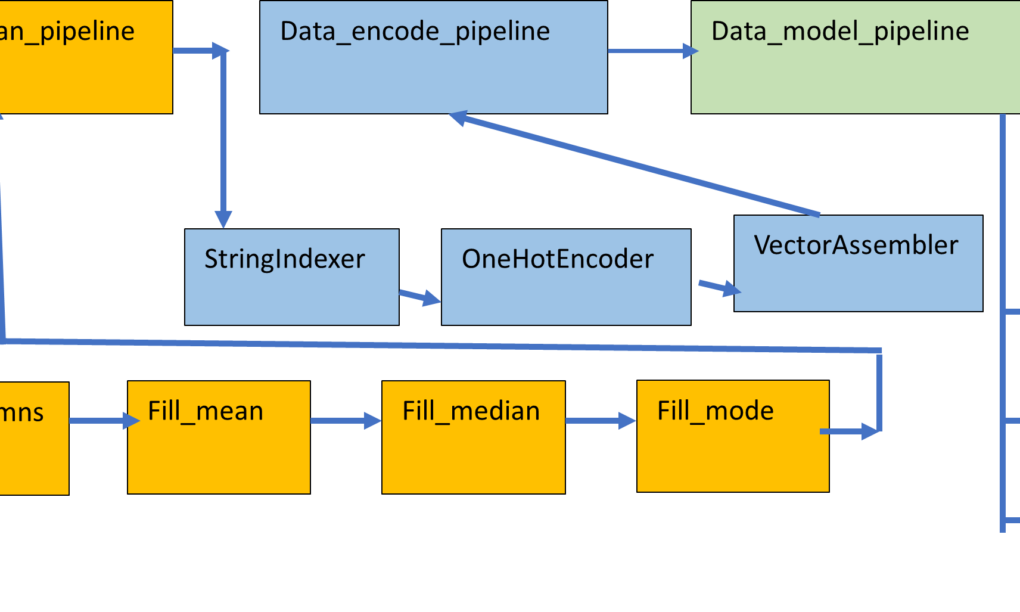

In this article, I illustrate how to perform machine learning classification in PySpark using a Pipeline. A pipeline is a sequence of stages that together perform a specific task: the output of one stage becomes the input to the next. A machine learning pipeline is composed of multiple stages, such as data cleaning, filling in missing values, encoding, modelling, and evaluation. Pipelines offer a more organised and structured way to write code, and they speed up the process by automating the workflow and chaining the stages together.
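
The idea that each stage's output feeds the next stage can be sketched without any library. The following is only a conceptual illustration in plain Python, not the `pyspark.ml` API; the stage functions, column names, and the three toy rows are all made up for this example.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]
Stage = Callable[[List[Row]], List[Row]]


def fill_missing_age(rows: List[Row]) -> List[Row]:
    """Replace missing ages with the mean of the known ages."""
    known = [r["age"] for r in rows if r["age"] is not None]
    mean_age = sum(known) / len(known)
    return [
        {**r, "age": r["age"] if r["age"] is not None else mean_age}
        for r in rows
    ]


def encode_sex(rows: List[Row]) -> List[Row]:
    """Encode the categorical 'sex' column as 0 (male) or 1 (female)."""
    mapping = {"male": 0, "female": 1}
    return [{**r, "sex": mapping[r["sex"]]} for r in rows]


def run_pipeline(rows: List[Row], stages: List[Stage]) -> List[Row]:
    """Feed the output of each stage into the next one, in order."""
    for stage in stages:
        rows = stage(rows)
    return rows


# Toy input rows (hypothetical data, for illustration only).
raw = [
    {"age": 20.0, "sex": "male"},
    {"age": None, "sex": "female"},
    {"age": 40.0, "sex": "female"},
]

prepared = run_pipeline(raw, [fill_missing_age, encode_sex])
print(prepared)
```

Each stage returns new rows instead of modifying its input, so stages can be reordered or swapped without side effects. A Spark pipeline follows the same chaining idea, but over distributed DataFrames.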

What is Big Data?

Big data refers to vast, complex datasets from various sources, including social media, sensors, and more. The features of these datasets can be described by the 5 V's:

Volume: The data is massive, often terabytes to exabytes, beyond what traditional systems can handle. Companies that process or analyse a huge number of transactions per unit of time, such as Walmart, fall into this category.

Velocity: Data is generated at high speed, as with social media or sensor data.

Variety: It’s diverse, from structured databases to unstructured text and images.

Veracity: The quality of the data is uncertain or unreliable. Data available from the web is noisy and chaotic, with missing values and inconsistencies.

Value: The data yields insights for data-driven decisions. It can generate the business insights needed to make important decisions or to support business intelligence.

Tools like Hadoop, Spark, NoSQL databases, and machine learning help analyze big data for industries like business, healthcare, finance, and marketing.

Introduction to Spark

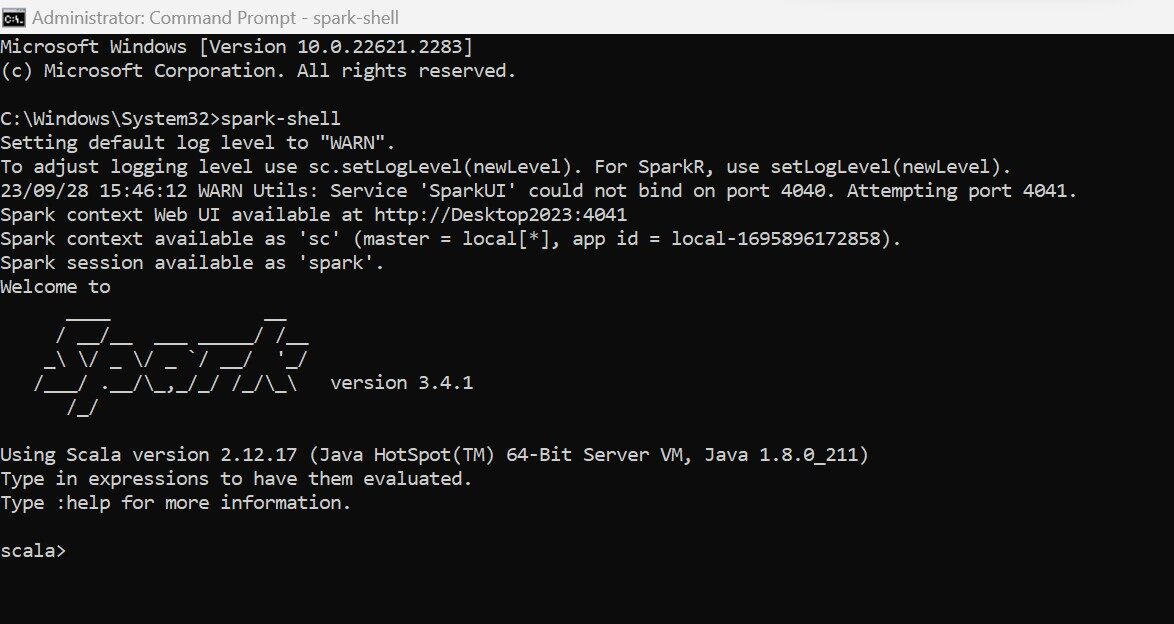

Spark is an open-source big data analytics framework that started in 2009 as a small research project at UC Berkeley's AMPLab to improve on the performance of Hadoop. Spark computes in memory, in contrast to Hadoop MapReduce, which writes its intermediate results to persistent storage. Thanks to in-memory computation, Spark can be up to 100 times faster than Hadoop MapReduce on some workloads. Spark became a top-level Apache project in February 2014.

Spark does not have its own storage layer. It can use Hadoop HDFS, cloud storage on AWS or GCP, or other storage systems. Spark provides a user-friendly API in several programming languages: Python, Scala, R, and Java.

SparkContext

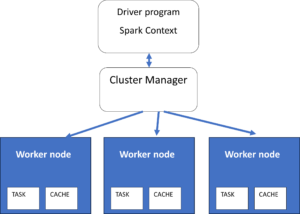

Spark has a master-slave architecture. A master node, the driver, acts as the coordinator for multiple worker nodes, whose executors perform the actual processing on the data. SparkContext (sc) is the entry point to the Spark driver. It is used to create RDDs (Resilient Distributed Datasets), which are immutable collections formed by partitioning the datasets loaded into Spark. Operations on RDDs run in parallel across partitions wherever possible, for example to compute summaries. In the interactive Spark shells, the SparkContext is created automatically by the environment and exposed as sc. In a standalone PySpark program, the SparkContext needs to be initialised explicitly.

Spark

The spark variable is the entry point to the Spark DataFrame API. It is an instance of SparkSession. The spark variable can also be used to execute SQL queries in Spark.


PySpark

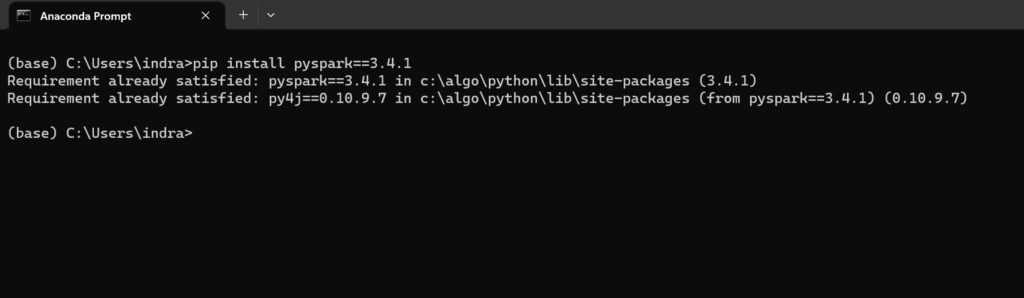

PySpark is the Python API for Spark. You need to import the pyspark module in Python and then set up the environment for working with the Spark framework. Instances of SparkContext and SparkSession need to be created explicitly in Python code. For PySpark to work, Spark must be installed on your machine, and the installed pyspark package must have the same version as that Spark installation.

Dataset

The dataset used is the Titanic dataset from Kaggle. It includes both train and test datasets.

https://www.kaggle.com/competitions/titanic/data
